As artificial intelligence continues to gain popularity with individuals and companies, more stars are speaking out about its use.

In an interview with The Associated Press, Cher expressed her fears about the technology after she heard someone use her voice to cover a song by Madonna.

“Someone did me doing a Madonna song, and it was kind of shocking,” she said.

“They didn’t have it down perfectly. But also, I’ve spent my entire life trying to be myself, and now these a–holes are going to go take it? And they’ll do my acting, and they’ll do my singing? It’s just, it’s out of control.”

Cher said artificial intelligence is “out of control.” (Gilbert Flores/Variety via Getty Images)

She continued, “I’m telling you, if you work forever to become somebody — and I’m not talking about somebody in the famous, money part — but an artist, and then someone just takes it from you, it seems like it should be illegal.”

Marva Bailer, an AI expert, told Fox News Digital that stars do have legal recourse when it comes to unauthorized use of their likeness or voice.

“The laws that exist in place are already – you need permission to use someone’s likeness, and a likeness could be their song, their voice, their image or performance. Those laws exist,” she said.

“But what we’re seeing now is with the use of AI, the amount of access of tools to the general public to create these real images or performances is now really available. And before it wasn’t, you had to have knowledge and use of these more complicated editing tools. Now we can take our phone, and we can wipe somebody out of a picture with one swipe. So what’s happening now is it’s just really the access. So the laws do exist, but you need to find what is being done wrong to that image and likeness.”

According to AI expert Marva Bailer, Cher does have legal recourse to deal with unauthorized use of her voice and likeness, but it can be hard to track down now that the average person has access to AI. (Sean Zanni/Patrick McMullan via Getty Images)

Bailer added that the onus is on performers to prove they were not involved in creating the project in the first place, and that unauthorized uses can be hard to find and identify.

Some may find Cher’s concerns about the technology ironic, as her Grammy-winning song “Believe,” released in 1998, is widely credited with the first prominent use of Auto-Tune as a vocal effect.

Cher, in her interview with the AP, pointed out that it was called a “pitch machine” at the time, and she had argued with producer Mark Taylor about using the technology.

“It started and it was like, ‘Oh my God, this is the best thing ever.’ And I thought, ‘You don’t even know it’s me. This is the best thing ever.’ And then we high-fived,” she recalled.

Both Cher and Selena Gomez, right, have expressed their worries about the use of their voices in unauthorized AI songs. (Getty Images)

Bailer noted that the computer-altered sound of Cher’s voice on the song was a deliberate choice.

“That is the choice of the artist. For someone to now take their voice or take their performance and make it into something that they did not authorize is absolutely not the same thing.”

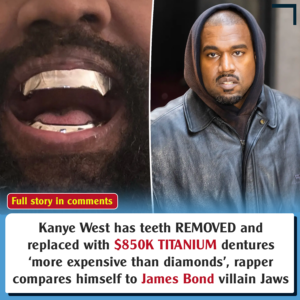

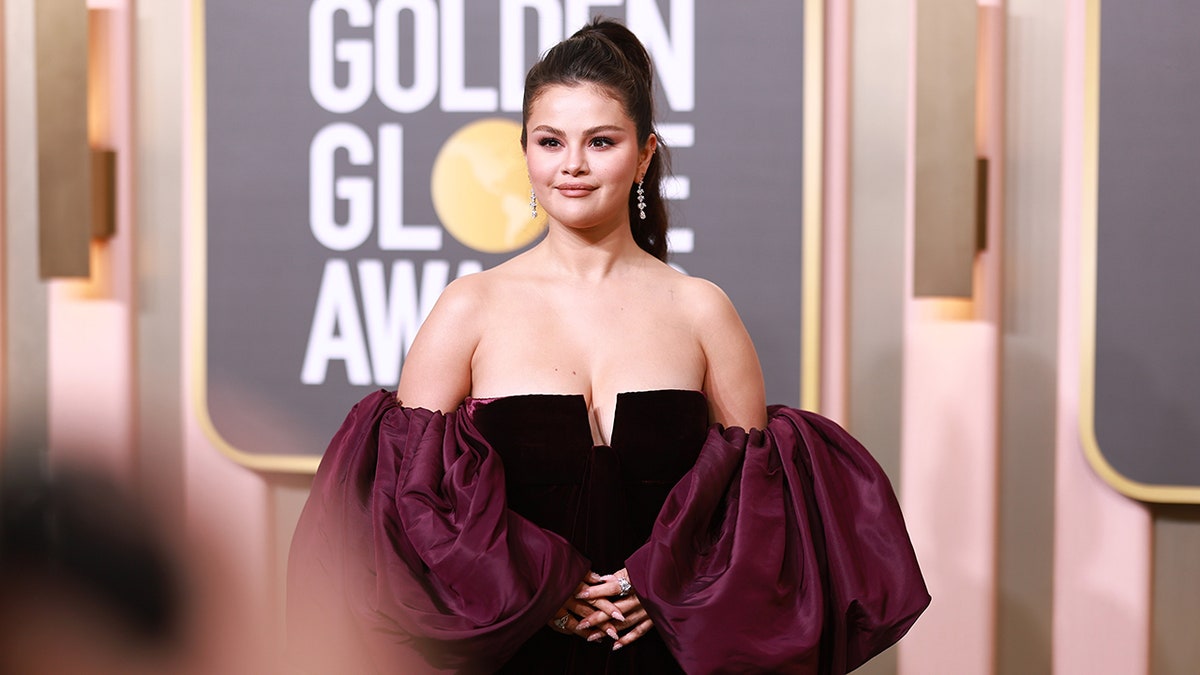

Selena Gomez also recently declared the use of her voice in an AI-generated cover version of a song “scary.”

A social media account created a version of the 2016 song “Starboy” by her ex-boyfriend, singer The Weeknd, using her voice.

Selena Gomez had her voice used in a cover version of the song “Starboy,” by her ex-boyfriend, The Weeknd. (Kevin Winter/Getty Images)

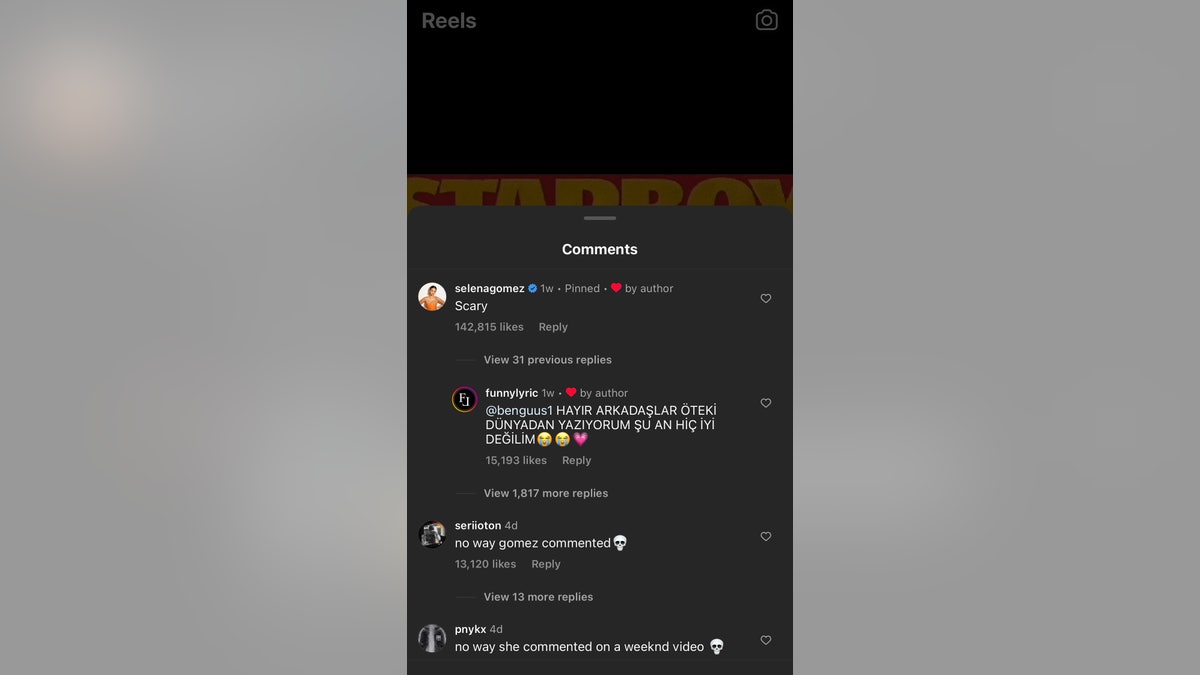

The clip featured the fake Gomez voice singing the song’s pre-chorus, “House so empty, need a centerpiece / Twenty racks a table, cut from ebony / Cut that ivory into skinny pieces / Then she clean it with her face, man, I love my baby, ah / You talkin’ money, need a hearin’ aid / You talkin’ ’bout me, I don’t see the shade / Switch up my style, I take any lane / I switch up my cup, I kill any pain.”

The caption on the video translated from Turkish read, “How did you find Selena’s viral cover of starboy voiced with artificial intelligence?”

In the comments on the post, Gomez responded, simply writing, “Scary.”

Bailer explained that part of the concern for artists, when random AI-generated songs pop up, is that the songs distract from their actual work.

Selena Gomez’s comment on the post using her voice in an AI-generated song. (Instagram)

In Gomez’s case, she released her latest song, “Single Soon,” in August, two months after the AI fake of “Starboy.”

“What’s really concerning right now in the current Selena Gomez situation is she, as an artist, is trying to launch a new song. And we want the attention on the new song and the performance. We don’t want it on all this noise in the background. And that’s where it gets really challenging, where a publicist has a whole plan scenario, and then these bad actors are coming in, and they’re really interrupting that flow and misguiding the attention of the fans,” Bailer explained.

However, those same fans can play a role in protecting their favorite artists.

Gomez has 420 million Instagram followers and ranks among the 20 most-followed accounts on X, which puts a vast number of eyes on anything related to her, authorized or not.

Selena Gomez is one of the most popular people on social media, making her a prime target for deep fakes, according to experts. (Michael Buckner/Billboard via Getty Images)

“Fans now have the opportunity to find people that are causing harm to their stars,” Bailer said. “Because at the end of the day, when someone is using AI or computer graphics to use a performance that is not authorized, the fans are going to know, and they’re going to be the first ones to call foul on this. And so it really has a balance of there’s lots of people trying to now harm the artists in a large scale. But then on the other side, you have the fans that are really protecting these artists because these artists are family to them.”

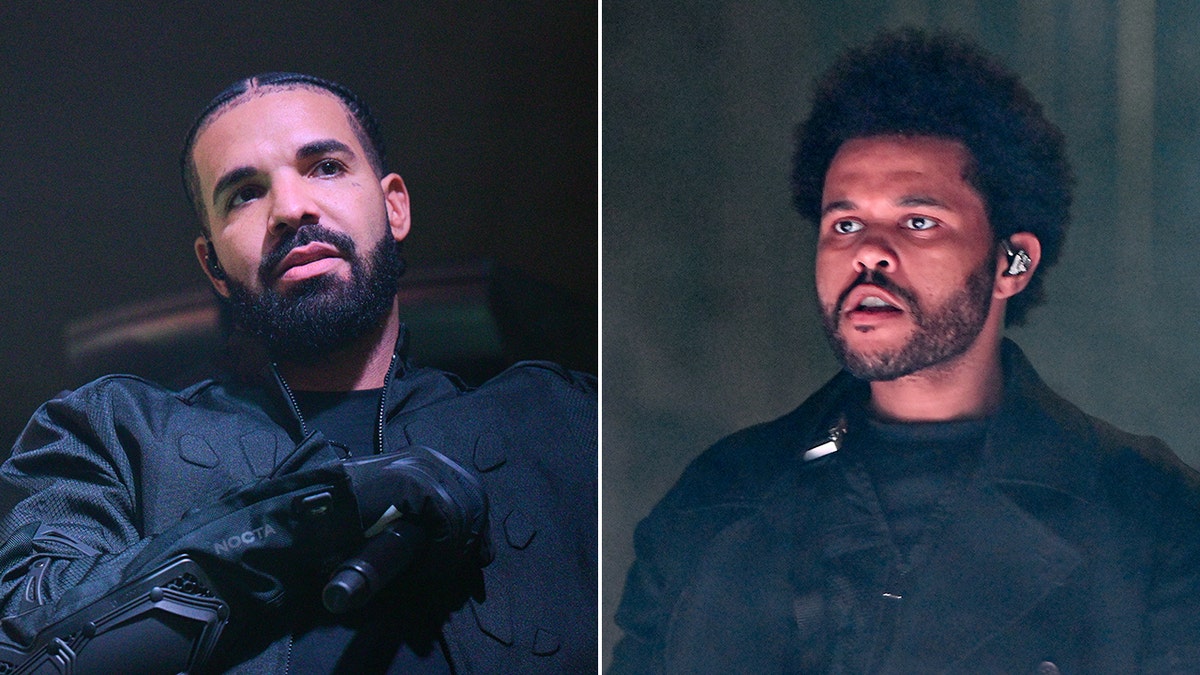

Coincidentally, The Weeknd and rapper Drake had their voices and styles mimicked by AI earlier this year.

In April, a song titled “Heart on My Sleeve” went viral on TikTok, with lyrics that focused on Gomez.

The creator of the song goes by @GhostWriter on TikTok and has shared multiple videos on the account using the new song.

A TikTok user used artificial intelligence to create a song that sounds like the voices of musicians Drake and The Weeknd. (Prince Williams/Paras Griffin)

The original video announcing the song currently has 10 million views. Three other videos shared by @GhostWriter each have just over a million views.

In Bailer’s view, Gomez keeps popping up in these scenarios due to her popularity.

“What people are doing is they’re creating these stories using music, and they’re putting innuendos in these stories to get a reaction,” she said. “And then they have a reaction back in this kind of back-and-forth. And they’re doing it to get attention. And it’s bad attention.”

That kind of attention can also be directed at people outside the world of celebrity.

Selena Gomez and other celebrities are not the only ones who can fall victim to deepfakes, according to experts. (Matt Winkelmeyer/FilmMagic/Getty Images)